It’s the Operating Model, Not the AI Model: Cursor’s Near Real-Time RL Machine

Traditionally, most AI labs and companies develop models in batch mode, relying on pre-collected or synthetic datasets and shipping model updates only a handful of times per year. Cursor flipped the script by building near real-time reinforcement learning (RL) feedback directly into a closed-loop lifecycle. With every developer keystroke, suggestion acceptance, or rejection, Cursor streams live user signals into its training pipeline. Using policy gradient methods, Cursor's Tab model is retrained and redeployed to all users every 1.5 to 2 hours.

The real advantage isn't the math or algorithm behind Cursor's Tab model suggestions. The secret sauce is their business process: turning user interactions into fresh data points. Their operating model makes learning continuous and actionable. Cursor has built a feedback machine that improves the developer experience in near real time.

The 70% That Most AI PoCs Miss

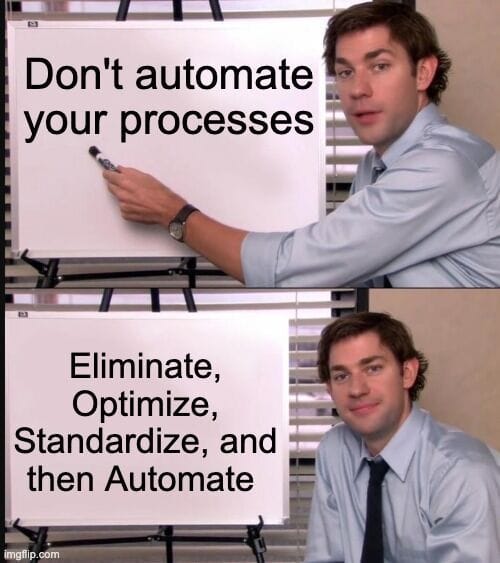

When companies chase AI value, they often fall into a "feature factory" mindset, where success is measured by the volume and novelty of features shipped, or by a narrow focus on using GPT-5 or Gemini 2.5. Taken together, these choices represent only a fraction of the potential AI value locked inside the enterprise.

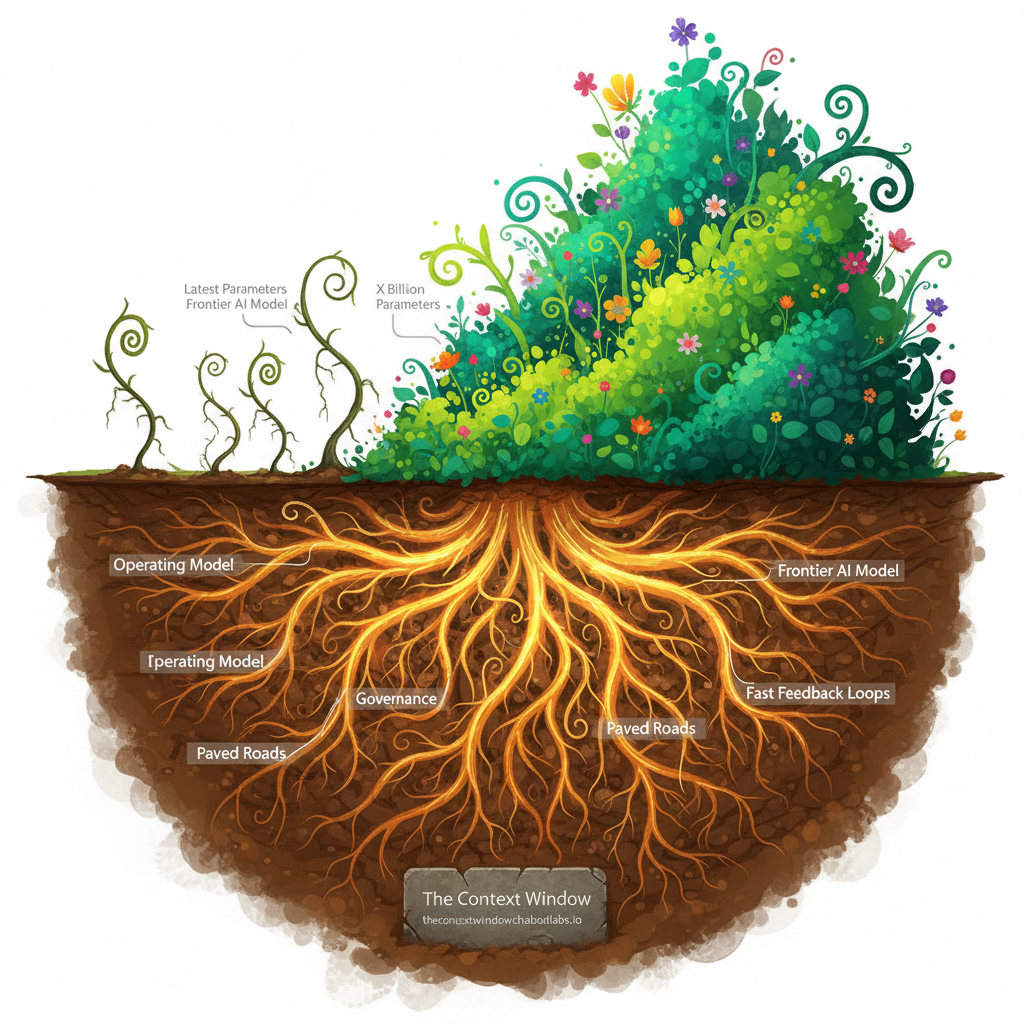

Real-world results tell a different story. Boston Consulting Group estimates that only about 10% of AI-driven business value comes from the model and another 20% from technology. The remaining 70% comes from transforming the underlying operating model. The current output-focused culture treats AI like another tool to be plugged into an existing machine, ignoring the arduous work of completely overhauling the underlying machine that drives business outputs.

AI transformation programs aren't the same as traditional ERP or CRM implementations. IT can't drag a begrudging business team along and expect to unlock the elusive 70% value bucket. Business teams need to be front and center, asking AI teams to partner with them in matching technologies to the outcomes they're driving toward.

Cursor's ability to ship updates multiple times a day is a prime example: they seem to understand how to unlock the AI value proposition that so many companies can't find.

Shipping in Hours, Not Quarters

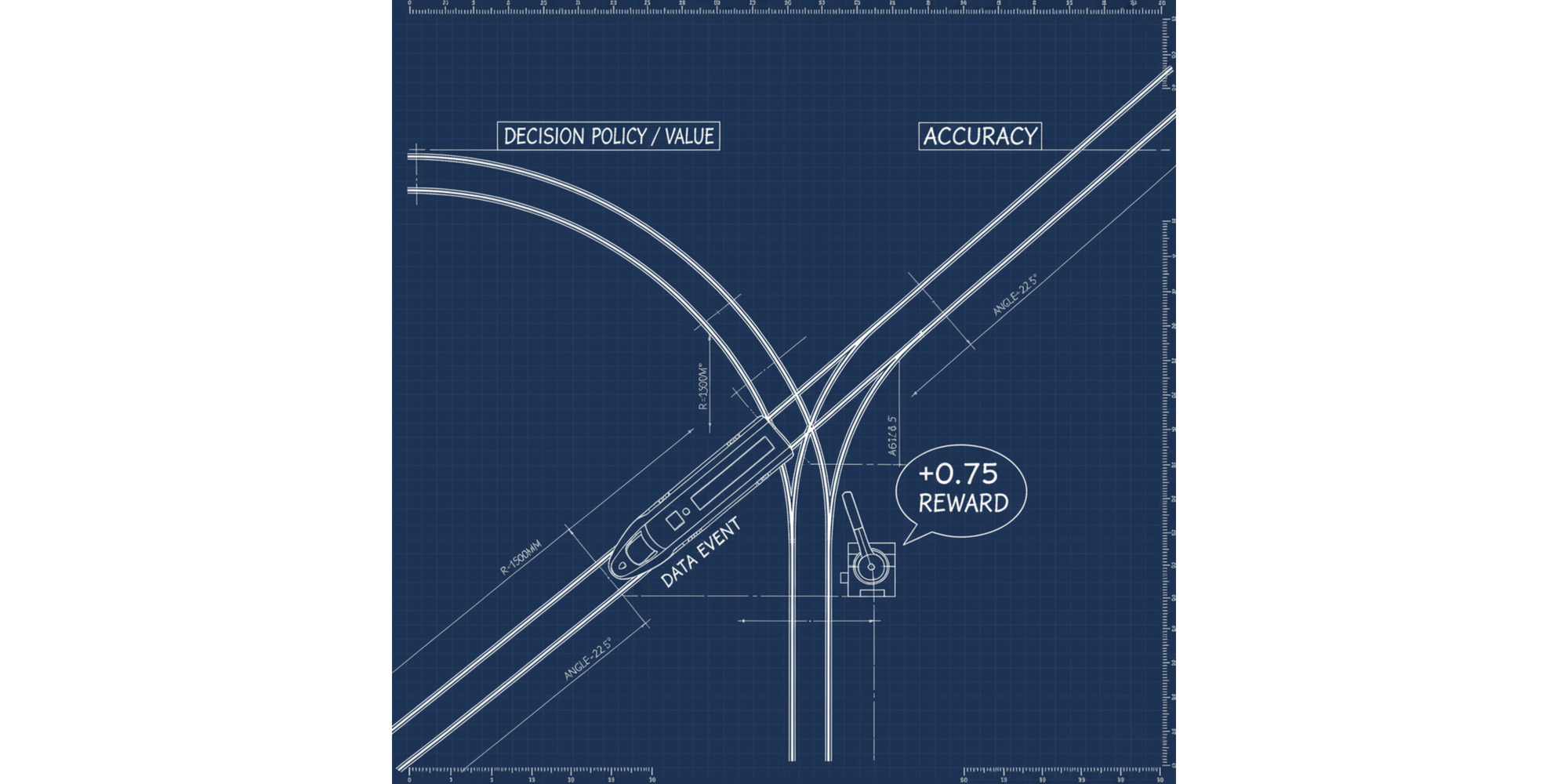

Cursor's Tab model acts as a prediction engine: it predicts what a developer might type next and offers a suggested completion. Every response is recorded as live feedback: accept, edit, or reject. Those signals stream into an RL pipeline, where the model is updated continuously on real user behavior rather than synthetic data.

Each event feeds into the data pipeline as a labeled data point for the model. Rewards drive learning (+0.75 for an acceptance, -0.25 for a rejection), constantly recalibrating the model's definition of "good." New checkpoints are trained on this data, validated, and deployed within hours. Behind the scenes, telemetry systems process millions of events, giving Cursor's ML-Ops teams a live pulse on how the Tab model is performing and the analytics they need to monitor KPIs.
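The two reward values above imply a decision threshold. Here is a minimal sketch, assuming a reward of 0 when no suggestion is shown (an assumption, not something stated in this article): if p is the probability a suggestion is accepted, showing it is worth 0.75p - 0.25(1 - p) = p - 0.25 in expectation, so it beats showing nothing only when p exceeds 25%. The constant and function names are hypothetical.

```python
# Reward values from the text; REWARD_NO_SUGGESTION = 0 is an assumption.
REWARD_ACCEPT = 0.75
REWARD_REJECT = -0.25
REWARD_NO_SUGGESTION = 0.0


def expected_reward_if_shown(p_accept: float) -> float:
    """Expected reward of showing a suggestion that is accepted with probability p_accept."""
    return p_accept * REWARD_ACCEPT + (1.0 - p_accept) * REWARD_REJECT


def best_action(p_accept: float) -> str:
    """Pick whichever action has the higher expected reward (ties favor holding back)."""
    if expected_reward_if_shown(p_accept) > REWARD_NO_SUGGESTION:
        return "show"
    return "hold back"


if __name__ == "__main__":
    for p in (0.10, 0.25, 0.40, 0.80):
        print(f"p={p:.2f}  E[reward]={expected_reward_if_shown(p):+.2f}  -> {best_action(p)}")
```

Framed this way, the reward scheme itself encodes a product decision (when a suggestion is worth the interruption), which the policy can then learn from live feedback instead of a hand-tuned rule.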

Instead of adding more parameters and bloating the model to chase accuracy, Cursor tunes its decision policy, helping the model learn what to suggest and when it should hold back.
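Tuning a decision policy rather than scaling the model can be illustrated with a toy show-or-hold-back policy trained with REINFORCE, the simplest policy-gradient method. This is a minimal sketch, not Cursor's implementation: the single feature `x`, the simulated developer who accepts with probability `x`, the learning rate, and the class and function names are all hypothetical. The rewards reuse the +0.75 / -0.25 values from the text, with an assumed 0 for holding back.

```python
import math
import random

# Rewards from the text; REWARD_HOLD = 0 is an assumption.
REWARD_ACCEPT, REWARD_REJECT, REWARD_HOLD = 0.75, -0.25, 0.0


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


class ShowPolicy:
    """Bernoulli policy over {show, hold back}: pi(show | x) = sigmoid(w * x + b)."""

    def __init__(self) -> None:
        self.w = 0.0
        self.b = 0.0

    def prob_show(self, x: float) -> float:
        return sigmoid(self.w * x + self.b)

    def update(self, x: float, shown: bool, reward: float, lr: float) -> None:
        # REINFORCE: theta += lr * reward * grad log pi(a | x).
        # For a sigmoid Bernoulli policy, grad log pi(a | x) = (a - pi(show | x)) * [x, 1].
        g = (1.0 if shown else 0.0) - self.prob_show(x)
        self.w += lr * reward * g * x
        self.b += lr * reward * g


def train(steps: int = 50_000, lr: float = 0.1, seed: int = 0) -> ShowPolicy:
    rng = random.Random(seed)
    policy = ShowPolicy()
    for _ in range(steps):
        x = rng.random()  # hypothetical context feature in [0, 1)
        shown = rng.random() < policy.prob_show(x)  # sample from the current policy (on-policy)
        if shown:
            accepted = rng.random() < x  # simulated developer response
            reward = REWARD_ACCEPT if accepted else REWARD_REJECT
        else:
            reward = REWARD_HOLD  # zero reward, so holding back produces no update here
        policy.update(x, shown, reward, lr)
    return policy


if __name__ == "__main__":
    policy = train()
    for x in (0.05, 0.25, 0.5, 0.9):
        print(f"x={x:.2f}  P(show)={policy.prob_show(x):.2f}")
```

Because these rewards make showing worthwhile only when the acceptance probability is above 25%, the trained policy should show suggestions readily at high `x` and hold back more as `x` falls toward and below that threshold. Nothing about the underlying completion model changes; only the decision policy does.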

Cursor's blog post walks through a number of equations and stresses how important on-policy deployment is. That's true, but without redefining their model release process and recognizing that user clicks could serve as labeled data points, they wouldn't have been able to take advantage of the policy-gradient theorem discussed in the post.
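For readers who want the math behind "on-policy," here is the standard policy-gradient (REINFORCE) form in a one-step setting: a context s (for example, the code around the cursor), an action a (a suggestion, or no suggestion), and a reward R(s, a) such as the acceptance/rejection rewards described earlier. The notation is generic, not copied from Cursor's post. The expectation is over actions drawn from the current policy; feedback logged under an older checkpoint, written here as an assumed π_old, can only be reused with an importance weight, and that weight's variance grows as the two policies drift apart. This is one reason frequent redeployment matters.

```latex
% On-policy gradient: actions sampled from the current policy \pi_\theta
\nabla_\theta J(\theta)
  = \mathbb{E}_{s \sim \mathcal{D},\; a \sim \pi_\theta(\cdot \mid s)}
    \left[ R(s, a)\, \nabla_\theta \log \pi_\theta(a \mid s) \right]

% Same gradient from feedback logged under an older checkpoint \pi_{\text{old}}
% (requires \pi_{\text{old}}(a \mid s) > 0 wherever \pi_\theta(a \mid s) > 0)
\nabla_\theta J(\theta)
  = \mathbb{E}_{s \sim \mathcal{D},\; a \sim \pi_{\text{old}}(\cdot \mid s)}
    \left[ \frac{\pi_\theta(a \mid s)}{\pi_{\text{old}}(a \mid s)}\,
           R(s, a)\, \nabla_\theta \log \pi_\theta(a \mid s) \right]
```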

Rethinking AI Advantage

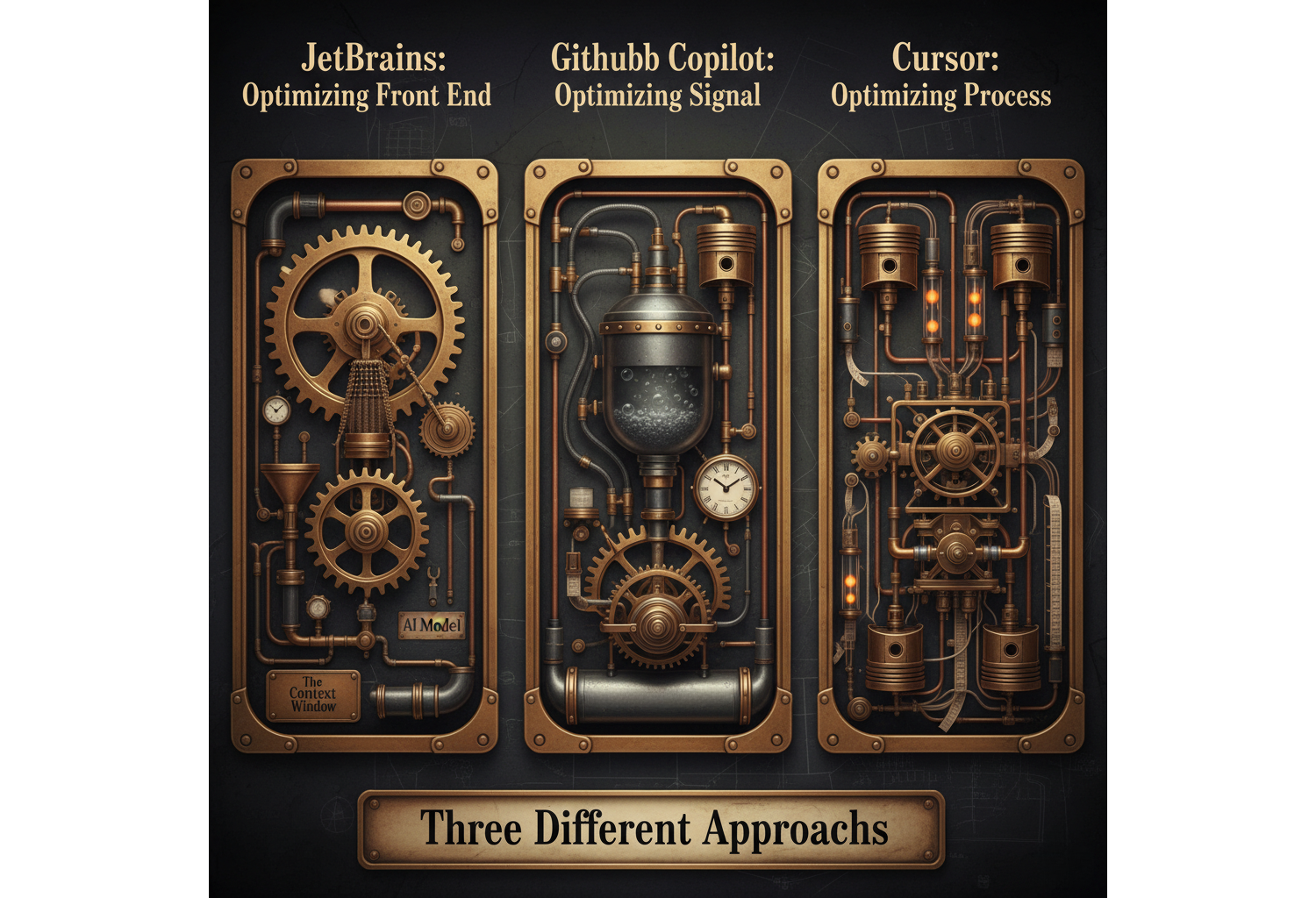

To understand why defining your operating model creates a defensible advantage, consider three companies developing AI coding assistants. Each sits at a different stage of maturity in operational design.

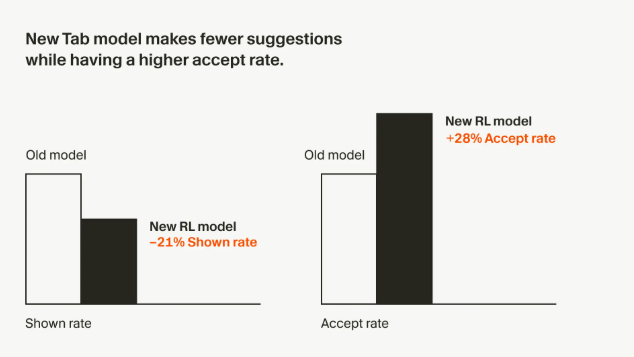

JetBrains: optimizing the front end.

JetBrains improved acceptance by adding a filter model that predicts whether a code-completion suggestion is likely to be accepted before showing it to an engineer. The result: roughly a 50% lift in acceptance. The approach is UX engineering that trims distraction, but it's limited to presentation-time logic. The system itself doesn't learn faster; it just interrupts less. It addresses UX concerns but leaves most of the value on the table, because it doesn't capture the value of the data the process generates.
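A presentation-time filter of this kind can be sketched as a small classifier sitting in front of an unchanged completion model. This is a minimal sketch, not JetBrains' implementation: the features, the hand-set weights, and the names are hypothetical, and the 0.15 threshold in the usage lines simply borrows the ~15% figure this article cites for Copilot.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Suggestion:
    text: str
    length: int           # hypothetical feature: characters in the completion
    model_logprob: float  # hypothetical feature: completion model's mean token log-prob
    mid_line: bool        # hypothetical feature: cursor sits in the middle of a line


# Hand-set, hypothetical weights; a real filter model would be fit to
# historical accept/reject telemetry.
BIAS = 1.0
W_LENGTH = -0.02
W_LOGPROB = 2.0
W_MID_LINE = -0.5


def predicted_accept_prob(s: Suggestion) -> float:
    """Logistic filter: estimated probability that the developer accepts `s`."""
    z = (BIAS + W_LENGTH * s.length + W_LOGPROB * s.model_logprob
         + W_MID_LINE * float(s.mid_line))
    return 1.0 / (1.0 + math.exp(-z))


def filter_suggestions(candidates: list[Suggestion], threshold: float) -> list[Suggestion]:
    """Keep only suggestions whose predicted acceptance clears the threshold.

    The completion model is untouched; only what gets displayed changes.
    """
    return [s for s in candidates if predicted_accept_prob(s) >= threshold]


if __name__ == "__main__":
    candidates = [
        Suggestion("return total", length=12, model_logprob=-0.2, mid_line=False),
        Suggestion("long low-confidence completion", length=80, model_logprob=-1.5, mid_line=True),
    ]
    shown = filter_suggestions(candidates, threshold=0.15)
    print([s.text for s in shown])
```

Note that nothing in this loop feeds the developer's eventual accept or reject back into the completion model, which is the limitation described above.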

GitHub Copilot: optimizing the signal.

Copilot's filter model takes a similar approach, skipping completions whose predicted acceptance probability falls below a threshold of roughly 15%. This reduces low-quality suggestions and again smooths the UX for developers. It's a step up from basic filtering, but its learning loop still runs in large offline batches, trained on telemetry aggregated over months at a time. The problem here is the currency of the data: the user feedback is weeks or months old and may span multiple versions of the completion model.
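One way to make the "currency" problem measurable is to tag each feedback event with the checkpoint that generated the suggestion, then check how much of a training batch actually came from the model being improved. This is a minimal sketch; the event fields, version labels, and dates are hypothetical and do not reflect Copilot's or Cursor's telemetry schema.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class FeedbackEvent:
    model_version: str   # checkpoint that generated the suggestion
    accepted: bool       # developer's response
    timestamp: datetime  # when the feedback was recorded


def on_policy_share(events: list[FeedbackEvent], current_version: str) -> float:
    """Fraction of events produced by the checkpoint currently being trained."""
    if not events:
        return 0.0
    matching = sum(1 for e in events if e.model_version == current_version)
    return matching / len(events)


def oldest_event_age(events: list[FeedbackEvent], now: datetime) -> timedelta:
    """Age of the stalest feedback in the batch (assumes a non-empty batch)."""
    return now - min(e.timestamp for e in events)


if __name__ == "__main__":
    now = datetime(2025, 1, 1)
    # Hypothetical quarterly batch spanning three checkpoints.
    quarterly = [
        FeedbackEvent("v1", True, now - timedelta(days=80)),
        FeedbackEvent("v2", False, now - timedelta(days=40)),
        FeedbackEvent("v3", True, now - timedelta(days=5)),
        FeedbackEvent("v3", False, now - timedelta(days=1)),
    ]
    # Hypothetical two-hour window collected entirely under the live checkpoint.
    two_hour = [
        FeedbackEvent("v3", True, now - timedelta(minutes=90)),
        FeedbackEvent("v3", False, now - timedelta(minutes=10)),
    ]
    for name, batch in (("quarterly", quarterly), ("two-hour", two_hour)):
        print(name, on_policy_share(batch, "v3"), oldest_event_age(batch, now))
```

In the batch setup, half of the feedback describes how developers reacted to models that are no longer serving; in the short-cycle setup, all of it reflects the current checkpoint.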

Cursor: optimizing the process.

Cursor's Tab model operates like a living system. Each developer action (accept, skip, or reject) is an on-policy signal that feeds into the RL loop. The model is retrained and redeployed in 1.5 to 2 hour cycles, with feedback flowing straight from users into training data. Governance, telemetry, and release engineering all align around guardrails that provide paved paths for fast, safe deployments.
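A cadence of a new checkpoint every couple of hours only stays safe if the promote-or-hold decision is automated. Below is a minimal sketch of such a release gate. The metric names and thresholds are hypothetical, not taken from Cursor's post: a candidate ships only if it passes every guardrail, and any failure is returned for a human to review.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class EvalMetrics:
    accept_rate: float     # share of shown suggestions that were accepted
    p95_latency_ms: float  # 95th-percentile serving latency
    n_events: int          # number of feedback events behind the estimate


@dataclass(frozen=True)
class Guardrails:
    # Hypothetical thresholds, agreed up front rather than negotiated per release.
    max_accept_rate_drop: float = 0.01
    max_p95_latency_ms: float = 200.0
    min_events: int = 10_000


def release_decision(
    candidate: EvalMetrics, current: EvalMetrics, rails: Guardrails
) -> tuple[bool, list[str]]:
    """Promote the candidate only if every guardrail passes.

    Returns (ok, failures). A non-empty failure list is the "exception"
    a human reviews; passing releases need no manual sign-off.
    """
    failures: list[str] = []
    if candidate.n_events < rails.min_events:
        failures.append(f"too few events: {candidate.n_events} < {rails.min_events}")
    if candidate.accept_rate < current.accept_rate - rails.max_accept_rate_drop:
        failures.append(
            f"accept rate regressed: {candidate.accept_rate:.3f} vs {current.accept_rate:.3f}"
        )
    if candidate.p95_latency_ms > rails.max_p95_latency_ms:
        failures.append(f"p95 latency too high: {candidate.p95_latency_ms:.0f} ms")
    return (not failures, failures)


if __name__ == "__main__":
    live = EvalMetrics(accept_rate=0.30, p95_latency_ms=150.0, n_events=50_000)
    candidate = EvalMetrics(accept_rate=0.31, p95_latency_ms=140.0, n_events=20_000)
    ok, failures = release_decision(candidate, live, Guardrails())
    print("deploy" if ok else f"hold for review: {failures}")
```

The design choice worth noting is that the thresholds live in code and are decided once. Each release cycle then costs no meeting unless something actually fails.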

The takeaway:

JetBrains improved interface quality.

Copilot improved prediction accuracy.

Cursor redefined the process with data and AI at the center.

This kind of operational velocity doesn't happen by accident; it requires governance designed as an enabler, not a bottleneck.

Governance as a Competitive Advantage

Cursor's operating model works because governance is designed into the process rather than added as an afterthought. Typically, when enterprise leaders hear the word "governance," the first things that come to mind are bureaucracy and stop signs. What governance should mean is a defined system of boundaries, checks, and visibility that lets teams move quickly while staying aligned with standards and compliance: paved paths.

Cursor's approach to training and deploying its Tab model shows what this can look like in practice. The rules about what data can be used, how updates are rolled out, and when to intervene need to be written into operating contracts and agreements. Teams don't need to wait for permission from a governance board; they work within the lanes governance provides, with controls and validations already built in. This frees the governance team to manage by exception, not by gate.
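"Rules written into the operating contract" can be as literal as data-use policy expressed as code. Cursor's post does not publish its governance rules, so every rule, field, and threshold below is hypothetical. The sketch shows the manage-by-exception pattern: non-compliant events are dropped automatically, and a person is pulled in only when the exclusion rate looks unusual.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass

ALLOWED_REGIONS = {"us", "eu"}  # hypothetical data-residency rule


@dataclass(frozen=True)
class TrainingEvent:
    opted_out: bool        # hypothetical: user disabled use of their data for training
    contains_secret: bool  # hypothetical: flagged by an upstream secret scanner
    region: str            # hypothetical: where the data originated


def policy_violations(event: TrainingEvent) -> list[str]:
    """Data-use rules expressed as code instead of a review meeting."""
    reasons = []
    if event.opted_out:
        reasons.append("opted_out")
    if event.contains_secret:
        reasons.append("secret")
    if event.region not in ALLOWED_REGIONS:
        reasons.append("region")
    return reasons


def apply_data_policy(
    events: list[TrainingEvent], escalation_rate: float = 0.05
) -> tuple[list[TrainingEvent], Counter, bool]:
    """Drop non-compliant events; flag for human review only if too many were dropped."""
    kept: list[TrainingEvent] = []
    excluded: Counter = Counter()
    for event in events:
        reasons = policy_violations(event)
        if reasons:
            excluded.update(reasons)  # count each violated rule
        else:
            kept.append(event)
    excluded_share = (len(events) - len(kept)) / len(events) if events else 0.0
    return kept, excluded, excluded_share > escalation_rate


if __name__ == "__main__":
    batch = [
        TrainingEvent(opted_out=False, contains_secret=False, region="us"),
        TrainingEvent(opted_out=True, contains_secret=False, region="us"),
        TrainingEvent(opted_out=False, contains_secret=False, region="eu"),
    ]
    kept, excluded, escalate = apply_data_policy(batch)
    print(len(kept), dict(excluded), "escalate" if escalate else "no action")
```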

For enterprise leaders, data and AI governance should enable:

- Consistency: Every team works from the same playbook, so good practices spread instead of being reinvented in every project or business function.

- Accountability: Each decision follows a paved path, allowing trust to scale with speed.

- Safety: Guardrails protect customer data, reputation, and brand integrity without the overhead of big reviews.

- Autonomy: Teams can experiment and deploy rapidly because they know the rules of engagement are already built in.

Sustainable AI Advantages Are Built On Process, Not Parameters

This wasn't the article or takeaway I originally planned to write. When I first read Cursor's blog post, "Improving Cursor Tab with online RL", I wanted to dig into the technology they were using that the frontier AI labs haven't figured out. But the more I read about how the Tab model's RL pipeline worked, and the more research I did, the more apparent it became that Cursor's competitive advantage (or "moat") came from the hard work they put into defining their operating model and governance. That work unlocked innovation you can't simply pick up and drop into another organization: something incredibly hard to replicate that delivers compounding value.

For executive leaders working to make their business AI-first, AI-forward, or whatever you want to call your journey, the lesson is this: real transformation isn't upgrading to the latest and greatest model; it's upgrading how your organization learns, how it continually reimagines its operating model, and how it gets shit done.

Written by JD Fetterly - Data PM @ Apple, Founder of ChatBotLabs.io, creator of GenerativeAIexplained.com, Technology Writer and AI Enthusiast.

All opinions are my own and do not reflect those of anyone else.